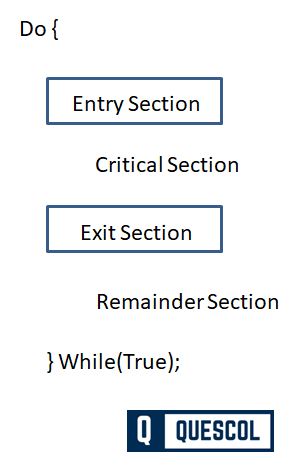

A contention situation arises if two independent programs or processes want to access common data, and this can lead to a collision. Code segments that access common data areas, are prone to collisions known as critical sections. A critical section is understood as program parts that must not be interrupted by other processes or threads while executed on the CPU.

It provided the processes or threads involved access shared resources. An atomic action is needed in a critical section i.e., a single process can run at a time in its critical section. All the entire processes have to wait to run in their critical sections.

What is Critical Section Problem?

The Critical Section Problem is a fundamental concept in operating systems and concurrent programming, addressing how multiple processes can access a shared resource, such as data in memory or a file, without leading to data inconsistency or corruption. It’s a key issue in the design and implementation of multitasking operating systems, where processes must be coordinated to ensure that only one process at a time can enter its critical section, where it accesses shared resources.

We can also say that a critical section is a code segment in a complete program where processes access shared resources. Shared resources can be a common variable or file. Suppose our code is performing some write operations on common variable.

As multiple processes are executing concurrently, any process can be interrupted in the mid of its execution. Due to partial execution on shared resources by processes I mean here we can say shared variables can lead to data inconsistencies. This situation is known as a race condition.

Race conditions lead to inconsistent states of data. Therefore, we need a synchronization protocol that allows processes to cooperate while manipulating shared resources, which essentially is the critical section problem.

Understanding the Critical Section

- Critical Section: A critical section is a part of a process that accesses shared resources (data structure, file, peripheral devices) that must not be concurrently accessed by more than one thread of execution.

- Problem Statement: Ensure that when one process is executing within its critical section, no other process is allowed to execute in its critical section.

Requirements for Critical Section Problems

Primary

- Mutual exclusion: If a process P1 is executing in a critical section then other processes can’t be executed in their critical section.

- Progress: If a process doesn’t require to run in a critical section, then it should not let wait indefinitely for other processes to take into the critical section.

Secondary

- Bounded waiting: Capable to forecast the waiting time for the entire process to take into the critical section.

- Architectural neutrality: When our solution is functioning perfectly on architecture, then it should execute on the other ones also. Hence, our technique must be architectural natural.

Solutions to the Critical Section Problem

Several synchronization mechanisms and algorithms have been developed to manage critical sections in operating systems:

- Locks and Mutexes: Basic locking mechanisms where a lock guards access to the critical section. A mutex (mutual exclusion) is a lock that allows only one thread to access the critical section at a time.

- Semaphores: More generalized than mutexes, semaphores can be used not only for mutual exclusion but also for coordinating access among multiple processes or threads. A semaphore can have a value greater than 1, allowing multiple entries into a critical section up to a set limit.

- Monitors: A high-level abstraction that provides a convenient and safe way to encapsulate mutexes and condition variables. Monitors consist of a mutex and zero or more condition variables for managing concurrent access to shared data.

- Peterson’s Solution: A classical software-based solution for two processes that use two variables: one to indicate if a process wants to enter its critical section and another to indicate the process giving priority if there’s a conflict.

- Turnstile Mechanism: Used in modern operating systems where a queue, or turnstile, is used to block threads when they cannot proceed, ensuring that threads are awakened in the order they were blocked.