- There are three types of Asymptotic Notation

- 1). Big-O Notation

- Constant c depends on the following factors

- The general stepwise procedure for Big-O runtime analysis is as follows

- Some of the useful examples on Big-O notation analysis are as follow

- According to Big O notation, we have five different categories of algorithms

- 2. Big Omega Notation

- Here’s how to think of a running time that is Ω(f(n))

- Important points of Big-Omega

- 3. Big Theta Notation

- Here’s how to think of a running time that is Θ(g(n))

- Important points

Asymptotic Notations are languages to express the required time and space by an algorithm to solve a given problem.

We can also define it as Asymptotic notation describes the running time of an algorithm for a given input. It is used to analyze the efficiency of the algorithm that is machine-independent.

- In Simple word, we can also define it as it is a function to describe the performance of an algorithm.

- We can’t provide an exact number which can define the time and space required by an algorithm when we analyze the complexity of any algorithm.

- So, We use standard notations, known as Asymptotic Notations, to express the required time and space by an algorithm.

- Asymptotic Notation helps to identifies the behavior of an algorithm on a given input or when the input size changes.

- Let us imagine that an algorithm as a function f and input size is n then the resultant running time for this algorithm would be f(n).

We can also plot a graph for this result using x and y-axis. The x-axis denotes the input size; Y-axis denotes the runtime and plot point denotes the resultants of the amount of time for a given input size.

There are three types of Asymptotic Notation

1). Big-O Notation

Big-O Notation is commonly denoted by O, is an Asymptotic Notation for the worst-case analysis of an algorithm.

It defines an upper bound of an algorithm. Let’s take an example of Insertion Sort. For the best case, it takes linear time, and for the worst case, it takes quadratic time. We can demonstrate it using its time complexity for both cases.

In the best case, the time complexity is O(n) comparisons, and in the worst case, it is О(n2). Normally time complexity of insertion sort is considered as O(n^2) because always we analyze the algorithms in the worst case.

In Big O, O stands for ‘order of’ which is concerned with what happens for large values of n. For example, if any sorting algorithm performs n^2 operations to sort the n elements, then that algorithm would be described as an O(n2) algorithm.

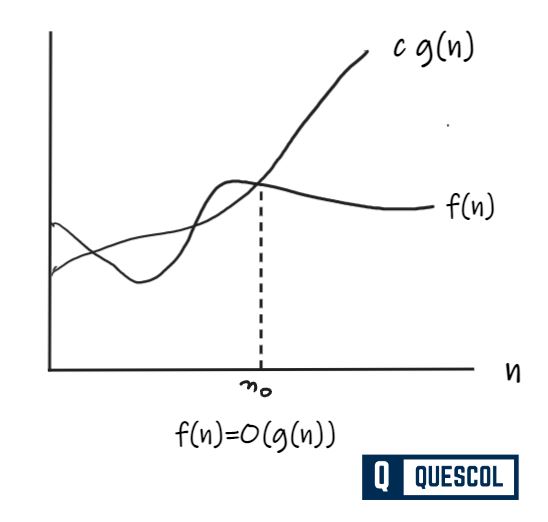

If the functions f(n) and g(n) are defined on a positive integer number n,

- f(n) = O(g(n)) iff there are two positive constants c and n0 such that |f(n)|≤ c|g(n)|for all n ≥ n0

- If f(n) is non-negative then we can simplify the condition to 0 ≤ f(n) ≤ cg(n) for all n ≥ n0

- We can say that “f(n) is big-O of g(n).”

- As n increases, f(n) grows no faster than g(n). In other words, g(n) is an asymptotic upper bound on f(n).

Constant c depends on the following factors

- The programming language you are using

- For the all its member’s Structure allocates storage space separately.

- Quality of the compiler or interpreter

- Size of the main memory

- Access time to the main memory

The general stepwise procedure for Big-O runtime analysis is as follows

- Figure out what the input is and what n represents.

- Express the maximum number of operations, and the algorithm performs in terms of n.

- Quality of the compiler or interpreter

- Remove all the constant factors.

If f(n) ≤ cg(n), c > 0, ∀ n ≥ n0

, then f(n) = O(g(n)) and g(n) is an asymptotically tight upper

bound for f(n).Example:

n2 + n = O(n3) Here, we have f(n) = n2 + n, g(n) = n3 Notice that if n ≥ 1, n ≤ n3 is clear. Also, notice that if n ≥ 1, n2 ≤ n3 is clear.

Side Note:

In general, if a ≤ b, then na ≤ nb whenever n ≥ 1. This fact is used often in these types of proofs.

Therefore,

n2 + n ≤ n3 + n3 = 2n3

We have just shown that n2 + n ≤ 2n3 for all n ≥ 1

Thus, we have shown that n2 + n = O(n3) (by definition of Big-O, with n0 = 1, and c = 2.)

Some of the useful examples on Big-O notation analysis are as follow

| g(n) | f(n) = O(g(n)) |

|---|---|

| 10 | O(1) |

| 2n3 + 1 | O(n3) |

| 3n2 + 5 | O(n2) |

| 2n3 + 3n2 + 5n – 10 | O(n3) |

According to Big O notation, we have five different categories of algorithms

- Constant time algorithm: running time complexity given as O(1)

- Linear time algorithm: running time complexity given as O(n)

- Logarithmic time algorithm: running time complexity given as O(log n)

- Polynomial-time algorithm: running time complexity given as O(nk) where k > 1

- Exponential time algorithm: running time complexity given as O(2n)

2. Big Omega Notation

Big-Omega is commonly denoted by Ω, is an Asymptotic Notation to denote the best case analysis of an algorithm.

- Ω notation provides an asymptotic lower bound.

- Sometimes, we want to say that an algorithm takes at least a certain amount of time, without providing an upper bound.

- We use big-Ω notation to show that least certain amount of time for an algorithm.

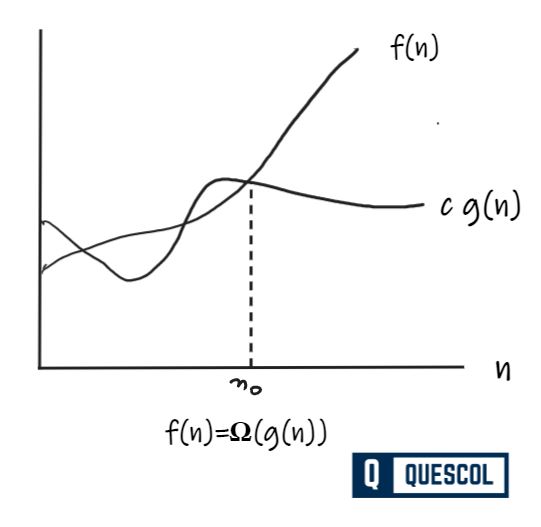

- If running time is Ω(f(n)), then for large enough n, the running time is at least k. f(n) for some constant k.

Here’s how to think of a running time that is Ω(f(n))

f(n) = Ω(g(n))

- if there are two positive constants c and n0 such that |f(n)|≥ c|g(n)| for all n ≥ n0

- If f(n) is nonnegative, then we can simplify the condition to 0 ≤ cg(n) ≤ f(n) for all n ≥ n0

- We say that “f(n) is omega of g(n).” As n increases, f(n) grows no slower than g(n). In other words, g(n) is an asymptotic lower bound on f(n).

- If f(n) >= cg(n), c > 0, ∀ n ≥ n0 , then f(n) = Omega(g(n)) and g(n) is an asymptotically tight lower bound for f(n).

Example:

n3 + 4n2 = Ω(n2) Here, we have f(n) = n3 + 4n2, g(n) = n2 It is not too hard to see that if n ≥ 0, n3 ≤ n3 + 4n2 We have already seen that if n ≥ 1, n2 ≤ n3 Thus when n ≥ 1, n2 ≤ n3 ≤ n3 + 4n2 Therefore, 1n2 ≤ n3 + 4n2 for all n ≥ 1 Thus, we have shown that n3 + 4n2 = Ω(n2) (by definition of Big-Ω, with n0 = 1, and c = 1.)

Important points of Big-Omega

- Best case Ω Of an algorithm describes a lower bound for all combinations of input. It implies that the function can never get any better than the specified value. For example, when sorting an array, the best case is when the array is already correctly sorted.

- Worst case Ω describes a lower bound for worst-case input combinations. It is possibly greater than the best case. For example, when sorting an array, the worst case is when the array is sorted in reverse order.

- If we simply write Ω, it means the same as best-case Ω

3. Big Theta Notation

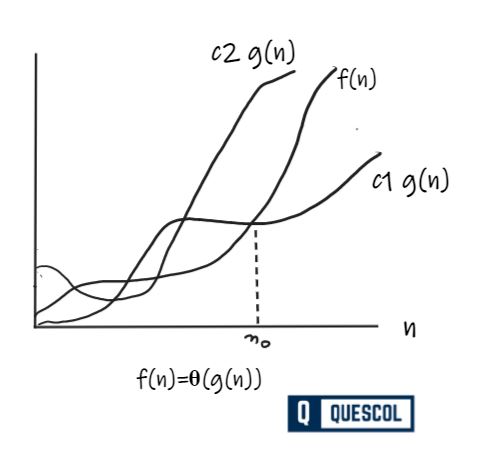

Big-Theta is commonly denoted by Θ, is an Asymptotic Notation to denote the average case analysis of an algorithm. The theta notation defines exact asymptotic behavior and bounds a function from above and below.

Here’s how to think of a running time that is Θ(g(n))

f(n) = Θ(g(n))

- iff there are three positive constants c1, c2 and n0 such that

- c1|g(n)| ≤ |f(n)| ≤ c2|g(n)| for all n ≥ n0

- If f(n) is nonnegative, we can simplify the last condition to 0 ≤ c1 g(n) ≤ f(n) ≤ c2 g(n) for all n ≥ n0.

- We say that “f(n) is theta of g(n).” As n increases, f(n) grows at the same rate as g(n). In other words, g(n) is an asymptotically tight bound on f(n).

Example:

n2 + 5n + 7 = Θ(n2) Where f(n) = n2 + 5n + 7 g(n) = n2 When n ≥ 1, n2 + 5n + 7 ≤ n2 + 5n2 + 7n2 ≤ 13n2 When n ≥ 0, n2 ≤ n2 + 5n + 7 Thus, when n ≥ 1 1n2 ≤ n2 + 5n + 7 ≤ 13n2 Thus, we have shown that n2 + 5n + 7 = Θ(n2) (by definition of Big-Θ, with n0 = 1, c1 = 1, and c2 = 13.)

Important points

- The best case in Θ notation is not used.

- Worst case Θ describes asymptotic bounds for the worst-case combination of input values.

- If we simply write Θ, it means the same as the worst case.